With the rise of text-generative AI tools, we need to re-evaluate traditional assessment methods and develop strategies to prevent their misuse.

Conventional assessment strategies such as exams, essays and multiple-choice questions have long been the fundamental means of assessing students’ academic performance (Mislevy et al., 2012). However, the rise of text-generative artificial intelligence tools such as ChatGPT requires a re-evaluation of these traditional assessment methods in higher education. While technology can significantly enhance the learning process, it also introduces the risk of undermining academic integrity. Universities must grapple with this challenge by developing strategies to prevent the misuse of AI tools. Recent studies have indicated a shift toward continuous, authentic, and adaptive assessments (Swiecki et al., 2022).

Additionally, when reconsidering assessment methods, it is vital to consider the diverse needs of neurodivergent students. Neurodiversity, as defined by the New Zealand Ministry of Education, encompasses a wide range of conditions, including dyslexia, dyspraxia, dyscalculia, dysgraphia, autism spectrum disorder, fetal alcohol spectrum disorder, attention deficit/hyperactivity disorder, trauma-related disorders, and auditory-visual processing disorders. The term covers a spectrum of learning needs and intensities, including learners who are 'twice-exceptional,' meaning they exhibit more than one identifiable set of symptoms (Mirfin-Veitch & Jalo, 2020).

However, it's essential to avoid making broad assumptions about the types of assessments suitable for specific groups of learners. The goal is to provide a diversified range of assessment types throughout a program of study that caters to all students' needs, irrespective of their disabilities (Hamilton & Petty, 2023). The strategies presented here broadly meet these needs, but some of the examples might appear daunting or even opaque to individual neurodivergent students, particularly those with challenges around central coherence, executive function, or social anxiety. To address this, additional examples have been added where required. In the transition to practice, teaching staff will be offered advice or professional development on structuring and explaining the assessment tasks appropriately.

Here are our top 10 strategies for redesigning assessment tasks to mitigate the misuse of generative AI tools, complete with practical examples. To maximise the educational benefits of these strategies, we have aligned them with relevant Charles Sturt policies and guidelines.

Shift the focus from grades to learning outcomes. Encourage students to view assessments as opportunities for growth rather than just a means to achieve high scores (Richardson, 2023).

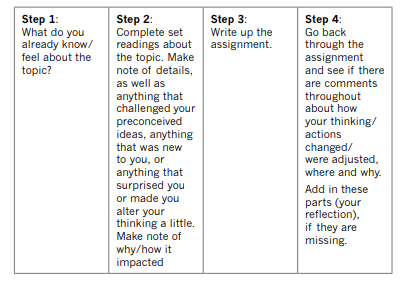

This process-centred approach focuses on assessing students' actions and tactics throughout their learning journey, which helps improve critical thinking and self-reflection skills.

Design assessments that require students to apply their knowledge to contemporary real-world scenarios or problems.

Authentic assessments are more meaningful to students and demonstrate their ability to apply knowledge in practical situations. They are also less susceptible to cheating because they test students' understanding rather than their ability to look up answers (Sotiriadou et al., 2020).

To learn more about developing authentic assessments, see these resources:

Design assessment tasks or questions that encourage critical thinking and problem-solving. Ask students to analyse information, draw connections, evaluate arguments, or propose solutions (Saroyan, 2022).

Tasks that encourage critical thinking and problem-solving can reduce the misuse of generative AI tools.

Create assessments with unique parameters for each student. This could involve student input or choice in developing the assessment topics.

This allows students to be actively involved in the assessment process, helping them learn more about assessment tasks and understand their importance in their own learning (Keppell, 2014).

Consider conducting live or recorded interviews or oral presentations as assessments.

This makes it difficult for students to use external resources without being detected.

Oral assessments may present their own set of challenges for neurodivergent students, who must manage concurrent demands on cognitive, social and emotional processing (Hand, 2023) while judging and maintaining a tone appropriate to the genre “without becoming inappropriately or overly formal, or worse, familiar” (McMahon-Coleman & Draisma, 2016). There is also some research on appropriately supporting neurodivergent students through a viva voce. One study argued for more preparation with this cohort (explaining the scenario, who will do what, and what kinds of questions are likely), as well as careful consideration of which examiners are likely to take a compassionate approach (Sandiland, MacLeod, Hall, & Chown, 2022). This suggests that how an oral assessment is prepared and conducted may matter more than the type of assessment itself.

Focus on questions that require students to apply knowledge in unique ways. This enquiry-based learning approach focuses on investigation and problem-solving (Deignan, 2009).

This makes it harder for students to find pre-written answers online or to generate them with text-generative AI tools such as ChatGPT.

Incorporate peer evaluations where students assess each other's work.

This can help deter cheating, as students may be less likely to cheat when they know their work will be assessed by their peers. Peer assessment encourages active learning, and students gain a deeper understanding of the subject matter and learning objectives. By assessing the work of their peers, students often engage in self-reflection and self-assessment. Peer assessment can also save instructors grading time, allowing them to focus on other aspects of teaching and giving students more timely feedback (Carlsson Hauff & Nilsson, 2021).

It can also work well for neurodivergent students if the task is well structured. Teaching staff will need to think about how the standards are set and benchmarked: a neurotypical and a neurodivergent student could have very different ideas about what kind of feedback is encouraging or how harshly to review.

Implement a system of frequent, low-stakes assessments throughout the teaching period.

This can reduce the temptation to cheat on high-stakes assessments, as the overall grade is distributed across multiple tasks. Benefits include continuous feedback, active engagement, motivation and reduced test and exam anxiety (Warnock, 2013). Ensure that the development of such tasks aligns with the Guidelines for Calibrating Student Workload.

This strategy is also considered the gold standard in inclusive education: checking students’ learning before the caravan moves on. Anonymous, unmarked in-class polls are one way to establish this practice.

Integrate tasks that demand creative problem-solving abilities by involving students in practical or hands-on projects that necessitate the creation of inventive solutions and unconventional thinking (Cardamone, 2023).

Such measures help build skills that are hard for AI tools to replicate.

Establish links between the curriculum and present-day situations, emphasising practical relevance and reconnecting with the human aspect of education, something generative AI tools cannot handle well. Create avenues for students to interact with experts in relevant fields through informational interviews, work-integrated learning, and other firsthand experiences. Inviting industry professionals into the classroom to share their knowledge, real-life experiences, and viewpoints fosters a lively exchange of ideas and perspectives.

As students apply their understanding in real-life scenarios, they develop competencies that AI tools cannot easily reproduce (Montagnino, 2023).

Material for this content has been drawn from:

Cardamone, C. (2023). Thinking about our assessments in the age of artificial intelligence (AI). Teaching@Tufts.

Carlsson Hauff, J., & Nilsson, J. (2021). Students’ experience of making and receiving peer assessment: The effect of self-assessed knowledge and trust. Assessment & Evaluation in Higher Education, 1–13. https://doi.org/10.1080/02602938.2021.1970713

Deignan, T. (2009). Enquiry-based learning: Perspectives on practice. Teaching in Higher Education, 14(1), 13–28. https://doi.org/10.1080/13562510802602467

Hamilton, L. G., & Petty, S. (2023). Compassionate pedagogy for neurodiversity in higher education: A conceptual analysis. Frontiers in Psychology, 14, Article 1093290. https://doi.org/10.3389/fpsyg.2023.1093290

Hand, C. J. (2023). Neurodiverse undergraduate psychology students’ experiences of presentations in education and employment. Journal of Applied Research in Higher Education. https://doi.org/10.1108/jarhe-03-2022-0106

Keppell, M. (2014). Personalised learning strategies for higher education. International Perspectives on Higher Education Research, 3–21. https://doi.org/10.1108/s1479-362820140000012001

Kifle, T. (2023). Assessment tasks that minimise students’ motivation to cheat. Times Higher Education. London, UK.

McMahon-Coleman, K., & Draisma, K. (2016). Teaching university students with autism spectrum disorder. Jessica Kingsley Publishers.

Mirfin-Veitch, B., & Jalo, N. (2020). Responding to neurodiversity in the education context: An integrative literature review. Dunedin: Donald Beasley Institute.

Mislevy, R. J., Behrens, J. T., Dicerbo, K. E., & Levy, R. (2012). Design and discovery in educational assessment: Evidence-centred design, psychometrics, and educational data mining. Journal of Educational Data Mining, 4(1), 11–48.

Montagnino, C. (2023). Six ways to maximize authentic learning in the AI era. Fierce Education. https://www.fierceeducation.com/student-engagement/six-ways-maximize-authentic-learning-ai-era